[ad_1]

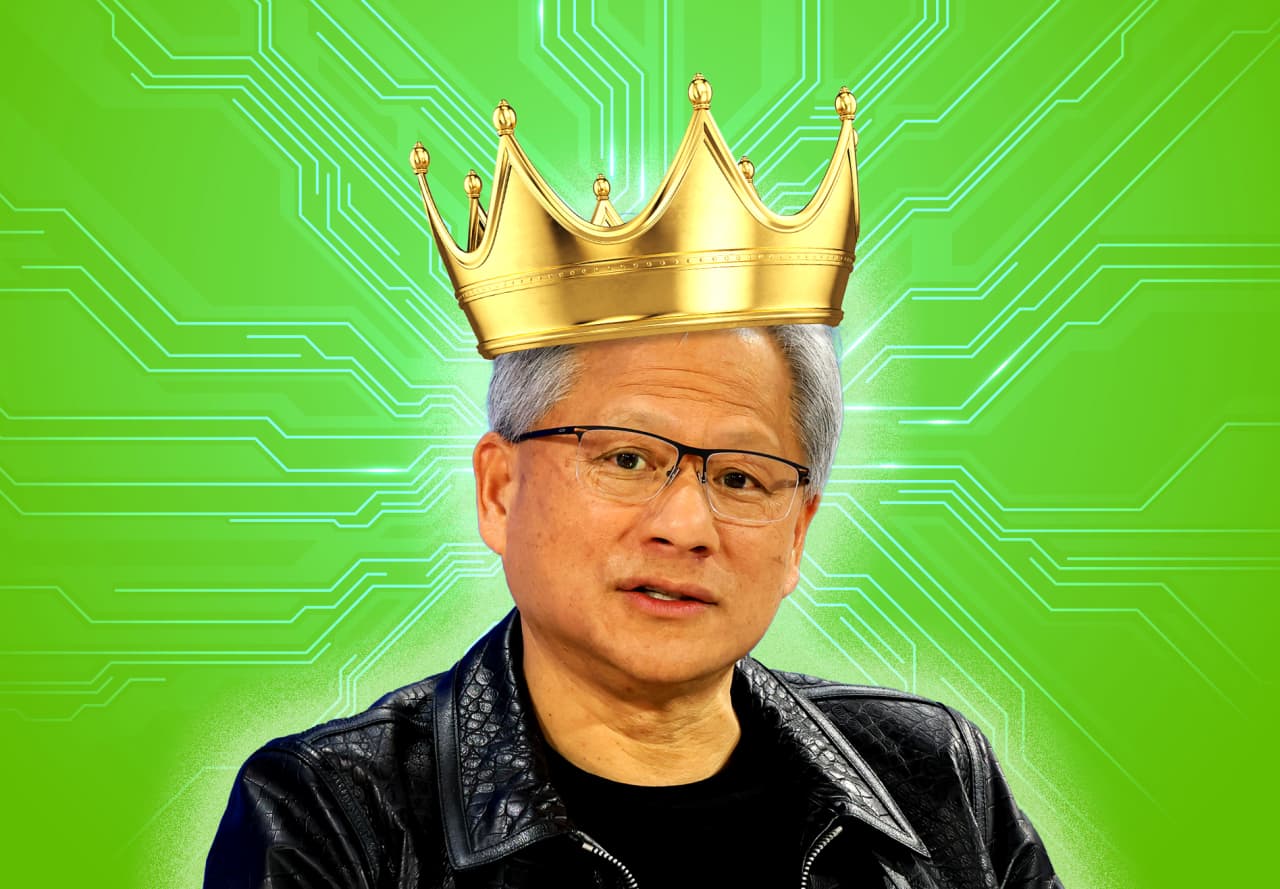

This year’s artificial-intelligence boom turned the landscape of the semiconductor industry on its head, elevating Nvidia Corp. as the new king of U.S. chip companies — and putting more pressure on the newly crowned company for the year ahead.

Intel Corp. (INTC), which had long been the No. 1 chip maker in the U.S., first lost its global crown as the biggest chip manufacturer to TSMC (2330) several years ago. Now, Wall Street analysts estimate that Nvidia’s (NVDA) annual revenue for its current calendar year will outpace Intel’s for the first time, making it No. 1 in the U.S. Intel is projected to see 2023 revenue of $53.9 billion, while Nvidia’s projected revenue for calendar 2023 is $56.2 billion, according to FactSet.

Even more spectacular are the projections for Nvidia’s calendar 2024: Analysts forecast revenue of $89.2 billion, a surge of 59% from 2023 and roughly triple its 2022 revenue. In contrast, Intel’s 2024 revenue is forecast to grow 13.3% to $61.1 billion. (Nvidia’s fiscal year ends at the end of January; FactSet’s data includes pro-forma estimates for calendar years.)
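For readers who want to check the arithmetic, a short Python sketch can recompute the growth rates from the FactSet figures above. It is an illustration only: the `ESTIMATES` table and the `growth_pct` helper are hypothetical names, and the sketch uses the rounded numbers printed in this article rather than FactSet’s underlying estimates.

```python
# Sketch: year-over-year growth implied by the FactSet calendar-year revenue
# estimates quoted in this article (billions of U.S. dollars, already rounded).
ESTIMATES = {
    "Nvidia": {2023: 56.2, 2024: 89.2},
    "Intel": {2023: 53.9, 2024: 61.1},
}


def growth_pct(prev: float, curr: float) -> float:
    """Return year-over-year growth from prev to curr, as a percentage."""
    return (curr / prev - 1.0) * 100.0


for company, revenue in ESTIMATES.items():
    g = growth_pct(revenue[2023], revenue[2024])
    print(f"{company}: ${revenue[2023]}B -> ${revenue[2024]}B ({g:.1f}%)")
```

Run as-is, it reports growth of about 58.7% for Nvidia, which rounds to the 59% cited, and about 13.4% for Intel, a tenth of a point above the reported 13.3%. That small gap is within what rounding each revenue figure to one decimal place can produce.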

“It has coalesced into primarily an Nvidia-controlled market,” said Karl Freund, principal analyst at Cambrian AI Research. “Because Nvidia is capturing market share that didn’t even exist two years ago, before ChatGPT and large language models…. They doubled their share of the data-center market. In 40 years, I have never seen such a dynamic in the marketplace.”

Nvidia has become the king of a sector that is adjacent to the core-processor arena dominated by Intel. Nvidia’s graphics chips, used to accelerate AI applications, reignited the data-center market with a new dynamic for Wall Street to watch.

Intel has long dominated the overall server market with its Xeon family of central processing units (CPUs), which are the heart of computer servers, just as CPUs are also the brain chips of personal computers. Five years ago, Advanced Micro Devices Inc. (AMD), Intel’s rival in PC chips, re-entered the lucrative server market after a multiyear absence, and AMD has since carved out a 23% share of the server market, according to Mercury Research, though Intel still dominates with a 76.7% share.

Graphics chips in the data center

Nowadays, however, the data-center story is all about graphics processing units (GPUs), and Nvidia’s have become favored for AI applications. GPU sales are growing at a far faster pace than sales of core server CPUs.

Nvidia accounted for essentially the entire data-center GPU market in the third quarter, selling about $11.1 billion in chips, accompanying cards and other related hardware, according to Mercury Research, which has tracked the GPU market since 2019. The company had a stunning 99.7% share of GPU systems in the data center, excluding any devices for networking, according to Dean McCarron, Mercury’s president. The remaining 0.3% was split between Intel and AMD.

Put another way: “It’s Nvidia and everyone else,” said Stacy Rasgon, a Bernstein Research analyst.

Intel is fighting back now, seeking to reinvigorate growth in data centers and PCs, which have both been in decline after a huge boom in spending on information technology and PCs during the pandemic. This month, Intel unveiled new families of chips for both servers and PCs, designed to accelerate AI locally on the devices themselves, which could also take some of the AI compute load out of the data center.

“We are driving it into every aspect of the applications, but also every device, in the data center, the cloud, the edge of the PC as well,” Intel CEO Pat Gelsinger said at the company’s New York event earlier this month.

While AI and high-performance chips are coming together to create the next generation of computing, Gelsinger said it’s also important to consider the power consumption of these technologies. “When we think about this, we also have to do it in a sustainable way. Are we going to dedicate a third, a half of all the Earth’s energy to these computing technologies? No, they must be sustainable.”

Meanwhile, AMD is directly going after both the hot GPU market and the PC market. It, too, had a big product launch this month, unveiling a new family of GPUs that were well-received on Wall Street, along with new processors for the data center and PCs. It forecast it will sell at least $2 billion in AI GPUs in their first year on the market, in a big challenge to Nvidia.

That forecast “is fine for AMD,” according to Rasgon, but it would amount to “a rounding error for Nvidia.”

“If Nvidia does $50 billion, it will be disappointing,” he added.

But AMD CEO Lisa Su might have taken a conservative approach with her forecast for the new MI300X chip family, according to Daniel Newman, principal analyst and founding partner at Futurum Research.

“That is probably a fraction of what she has seen out there,” he said. “She is starting to see a robust market for GPUs that are not Nvidia…We need competition, we need supply.” He noted that it is early days and the window is still open for new developments in building AI ecosystems.

Cambrian’s Freund noted that it took AMD about four to five years to gain 20% of the data-center CPU market, making Nvidia’s stunning growth in GPUs for the data center even more remarkable.

“AI, and in particular data-center GPU-based AI, has resulted in the largest and most rapid changes in the history of the GPU market,” said McCarron of Mercury, in an email. “[AI] is clearly impacting conventional server CPUs as well, though the long-term impacts on CPUs still remain to be seen, given how new the recent increase in AI activity is.”

The ARMs race

Another development that will further shape the computing hardware landscape is the rise of a competing approach to x86, known as reduced instruction set computing (RISC). In the past, RISC has mostly made inroads in mobile phones, tablets and embedded systems dedicated to a single task, through the chip designs of ARM Holdings Plc (ARM) and Qualcomm Inc. (QCOM).

Nvidia tried to buy ARM for $40 billion, but it abandoned the deal early last year after failing to win regulatory approval. Instead, ARM went public earlier this year, and it has been promoting its architecture as a low-power option for AI applications. Nvidia has worked with ARM for years. Its ARM-based CPU, called Grace, which is paired with its Hopper GPU in the “Grace-Hopper” AI accelerator, is used in high-performance servers and supercomputers. But these chips are still often paired with x86 CPUs from Intel or AMD in systems, noted Kevin Krewell, an analyst at Tirias Research.

“The ARM architecture has power-efficiency advantages over x86 due to a more modern instruction set, simpler CPU core designs and less legacy overhead,” Krewell said in an email. “The x86 processors can close the gap between ARM in power and core counts. That said, there’s no limit to running applications on the ARM architecture other than x86 legacy software.”

Until recently, ARM-based RISC systems had only a small share of the server market. Now RISC-V, an open-standard RISC instruction set that has been around for more than a decade, is also capturing the attention of big internet and social-media companies, as well as startups. Power consumption has become a major issue in data centers, and AI accelerators use enormous amounts of energy, so companies are looking for alternatives that cut power usage.

Estimates for ARM’s share of the data center vary slightly, ranging from about 8%, according to Mercury Research, to about 10%, according to IDC. ARM’s growing presence “is not necessarily trivial anymore,” Rasgon said.

“ARM CPUs are gaining share rapidly, but most of these are in-house CPUs (e.g. Amazon’s Graviton) rather than products sold on the open market,” McCarron said. Amazon’s (AMZN) Graviton processor family, first offered in 2018, is optimized to run cloud workloads at Amazon Web Services. Alphabet Inc. (GOOG, GOOGL) is also developing its own custom ARM-based CPUs, code-named Maple and Cypress, for use in its Google Cloud business, according to a report earlier this year by The Information.

“Google has an ARM CPU, Microsoft has an ARM CPU, everyone has an ARM CPU,” said Freund. “In three years, I think everyone will also have a RISC-V CPU…. It is much more flexible than an ARM.”

In addition, some AI chip and system startups, such as Tenstorrent Inc., are designing around RISC-V. Tenstorrent is led by well-regarded chip designer Jim Keller, who has also worked at AMD, Apple Inc. (AAPL), Tesla Inc. (TSLA) and Intel.

Opportunity for the AI PC

Like Intel, Qualcomm has also launched an entire product line around the personal computer, a major new push for a company best known for its mobile processors. It cited the opportunity and need to bring AI processing to local devices, or the so-called edge.

In October, it said it is pushing deeper into the PC business, which is dominated by Intel’s x86 architecture, with its Snapdragon X Elite platform, built on its own custom ARM-based CPU design. It designed the new processors specifically for the PC market, where it said their lower power consumption and far faster processing will be a huge hit with business users and consumers, especially those running AI applications.

“We have had a legacy of coming in from a point where power is super important,” said Kedar Kondap, Qualcomm’s senior vice president and general manager of compute and gaming, in a recent interview. “We feel like we can leverage that legacy and bring it into PCs. PCs haven’t seen innovation for a while.”

Software compatibility could be an issue, but Qualcomm has partnered with Microsoft on emulation software, and it lined up support from many PC makers, with PCs based on its chips expected to be ready to tackle computing and AI challenges in the second half of 2024.

“When you run stuff on a device, it is secure, faster, cheaper, because every search today is faster. Where the future of AI is headed, it will be on the device,” Kondap said. Indeed, at its chip launch earlier this month, Intel cited Boston Consulting Group, which forecast that by 2028, AI-capable PCs will make up 80% of the PC market.

All these product shifts will bring new challenges to leaders like Nvidia and Intel in their respective arenas. Investors are also somewhat nervous about whether Nvidia can keep up its current growth pace, but last quarter Nvidia pointed to new and expanding markets, including countries and governments with complex regulatory requirements.

“It’s a fun market,” Freund said.

And investors should be prepared for more technology shifts in the year ahead, with more competition and new entrants poised to take some share — even if it starts out small — away from the leaders.
