
Using ChatGPT without citation could be seen as unethical or even regarded as plagiarism. It’s a debate that has been raging in academic, legal, journalistic and other professional circles.

But there are other, perhaps more timely and important, questions. Question No. 1: Does ChatGPT itself engage in copyright infringement? Question No. 2: Does it plagiarize other people’s work?

Lawyers and AI experts have their own views, but how would ChatGPT answer these questions? MarketWatch decided to find out.

First, some background: The question of whether ChatGPT itself engages in copyright infringement is the subject of several class-action lawsuits filed on behalf of authors, including the comedienne Sarah Silverman.

Silverman last month announced that she had decided to join a class-action suit against OpenAI and Facebook owner Meta for copyright infringement. The lawsuit contends that her 2010 memoir was copied by the AI system “without consent, without credit and without compensation.”

ChatGPT, an artificial-intelligence chatbot, is a product of OpenAI and made its free, public debut last November. It can answer questions, give advice, produce creative writing and research academic and legal issues (with varying degrees of accuracy), and some observers say it can even be employed by financial advisers to pick stocks.

ChatGPT dishes out answers to its users in seconds using large language models (or LLMs), a kind of artificial intelligence that is trained on vast amounts of text, much of it gathered from the web, and that mimics human responses.

Joseph Saveri and Matthew Butterick, the lawyers representing Silverman and other writers, were blunt in their view of OpenAI and other AI chatbots, and are attempting to draw a direct line between aggregation of information from the web, plagiarism and copyright infringement. In a joint statement, they wrote that OpenAI and Meta are “industrial-strength plagiarists that violate the rights of book authors.”

“Much of the material in the training datasets used by OpenAI and Meta comes from copyrighted works — including books written by plaintiffs — that were copied by OpenAI and Meta without consent, without credit, and without compensation,” the lawyers said in a statement. “Many of these books likely came from ‘shadow libraries,’ websites that distribute thousands of pirated books and publications.” (Saveri and Butterick did not respond to a request for comment.)

Tim Giordano, a partner at the Clarkson Law Firm, has also filed class-action suits, along with Tracey Cowan and Ryan Clarkson, against both OpenAI and Google parent Alphabet Inc.

The lawsuits accuse the companies of theft of personal information and copyright infringement, among other claims. “‘Publicly available’ has never meant free to use for any purpose,” Giordano said in a statement.

Halimah DeLaine Prado, Google’s general counsel, said in a statement to MarketWatch: “We’ve been clear for years that we use data from public sources — like information published to the open web and public datasets – to train the AI models behind services like Google Translate, responsibly and in line with our AI Principles. American law supports using public information to create new beneficial uses, and we look forward to refuting these baseless claims.”

Last month, the U.S. Federal Trade Commission opened an investigation into whether ChatGPT maker OpenAI engaged in unfair or deceptive practices that caused “reputational harm” to consumers.

The FTC asked OpenAI to detail the steps it has taken to address or mitigate the risk of its products generating information about “real individuals that are false, misleading or disparaging,” according to a 20-page letter.

(OpenAI did not respond to multiple requests for comment.)

Does ChatGPT engage in copyright infringement?

There is an important legal difference between copyright infringement and plagiarism. “Copyright infringement includes the unauthorized or unlicensed copying of a work subject to copyright,” according to the University of Illinois Chicago.

“Plagiarism is using someone else’s work or ideas without giving proper credit,” it adds. “In other words, because you are not giving attribution to the owner of the original work or idea — you are presenting the idea or thought as your own.”

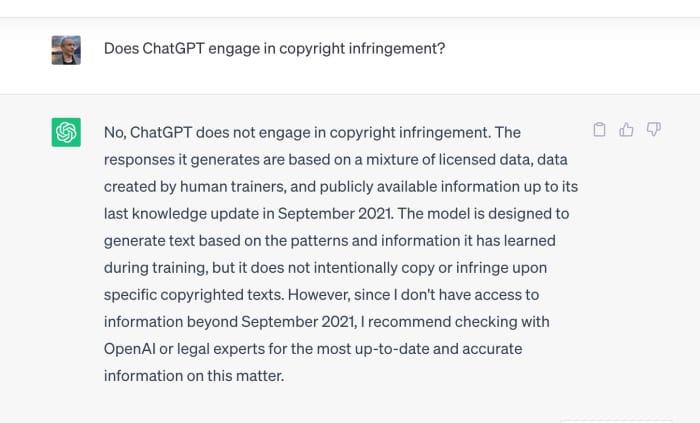

Question No. 1. This reporter asked ChatGPT: “Does ChatGPT engage in copyright infringement?”

The response was definitive, but came with multiple qualifications.

“No, ChatGPT does not engage in copyright infringement,” ChatGPT responded. “It generates text based on the input it receives and the training data it has been provided, but it does not intentionally replicate specific copyrighted content.”

And now for the caveat:

“However, it’s important to note that the text it generates can sometimes resemble existing content, and there is a possibility that generated text could inadvertently resemble copyrighted material,” it adds. “Users should be cautious and responsible when using the model to avoid any potential copyright issues.”

The issue of whether ChatGPT engages in copyright infringement appears to hinge on the word “resembling” — rather than outright “copying” — somebody else’s work, observers say. Whether a court would agree with that distinction, and conclude that OpenAI’s aggregation of other people’s work on the web did not rise to the level of copyright infringement, remains to be seen.

What do lawyers think of this response?

“In terms of ChatGPT’s response to your prompt, it’s a good example of what we often see from ChatGPT — authoritative-sounding but substantively flawed statements,” said Dave Fagundes, a professor at the University of Houston Law Center who writes and teaches about copyright.

“First, the response assumes that the only possible form of infringement is in the possible creation of infringing output — that is, content that is identical to or substantially similar to copyrighted works of authorship — when in fact the major claim averred on this relates to the LLMs’ ‘learning’ process,” he said. “Second, the response assumes that copyright infringement needs to be intentional, when that is not an element under U.S. law.”

The allegation that ChatGPT and others engage in copyright infringement has little or nothing to do with the result of prompts, he said, and much more, if not everything, to do with the process by which these machines learn. “The latter entails creating verbatim reproductions of billions of works culled from the internet without authorization from their owners,” Fagundes told MarketWatch. “The plaintiffs in the lawsuits you mentioned are primarily arguing that it is this process that amounts to infringement.”

“In copyright law, there is basically no such thing as innocent or accidental infringement, and to the extent there is, ChatGPT cannot avail itself of that defense,” added Christopher Buccafusco, a professor at Duke University School of Law, whose research covers a range of topics, including intellectual property law. “ChatGPT and other generative AI models are trained on huge amounts of data, and that data is technically copied according to copyright law.”

“Importantly, though, in copyright law, not all copying is copyright infringement,” he told MarketWatch. “Generative AI models engage in non-expressive copying — they are copying not to repeat what they copy but to learn from what they copy. And according to most scholars, this sort of non-expressive copying should be treated as non-infringing fair use.”

Fagundes agrees. Plaintiffs’ arguments that AI technology infringes their copyrights through its learning process may well founder on a “fair use” theory, he said. “Courts have repeatedly held that non-expressive uses of copyrighted works by copy-reliant technologies don’t infringe — even in the absence of permission to use the copied works because they are statutorily protected fair uses of those works,” he said. “This is, for example, why Google Books’ copying millions of volumes without authorization to populate its database was held not infringing.”


Is ChatGPT plagiarism?

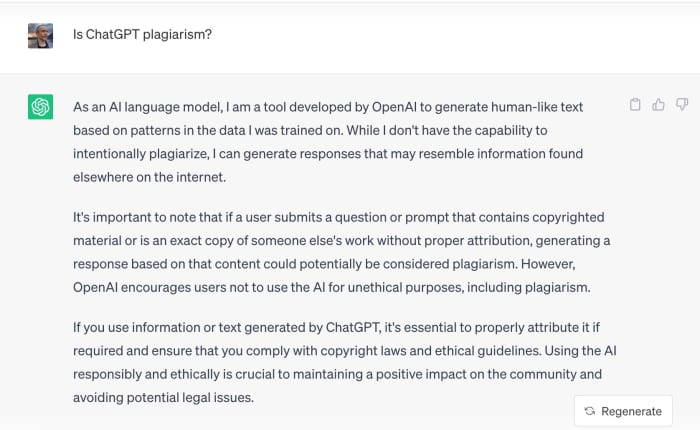

And so to Question No. 2. This reporter asked ChatGPT: “Is ChatGPT plagiarism?”

Here is the chatbot’s response: “As an AI language model, I am a tool developed by OpenAI to generate human-like text based on patterns in the data I was trained on. While I don’t have the capability to intentionally plagiarize, I can generate responses that may resemble information found elsewhere on the internet.”

That’s neither a yes nor a no. Still, ChatGPT acknowledges that its answers may contain plagiarized material.

ChatGPT added: “It’s important to note that if a user submits a question or prompt that contains copyrighted material or is an exact copy of someone else’s work without proper attribution, generating a response based on that content could potentially be considered plagiarism. However, OpenAI encourages users not to use the AI for unethical purposes, including plagiarism.”

“If you use information or text generated by ChatGPT, it’s essential to properly attribute it if required and ensure that you comply with copyright laws and ethical guidelines,” ChatGPT’s answer concluded. “Using the AI responsibly and ethically is crucial to maintaining a positive impact on the community and avoiding potential legal issues.”

So ChatGPT says it is aware that its actions can lead to plagiarism. That appears to put the responsibility at the feet of the user, observers say. For all its sophistication and speed, nowhere does ChatGPT say it provides citations.

“It’s basically saying it’s the user’s responsibility to check up on AI. It moves the responsibility away from itself,” said Lisa McLendon, a professor of journalism and mass communications at the University of Kansas. “It’s not going to come out and say, ‘Students use me to cheat.’”

“The bigger issue is that people trust it too much,” she added, “and it’s not reliable.”

So what are we to make of this response?

The issue is fraught and far from cut-and-dried. “By and large, leveling plagiarism charges toward generative AI is not as easy as it might seem,” Dr. Lance Eliot, a global AI expert, told MarketWatch.

“Even if the generative AI perchance emits word-for-word some other existing content, you can simply ask the generative AI what the source was,” he said. “It would certainly be better for generative AI to always provide a designated citation, though this is not necessarily how generative AI has been devised. A follow-up prompt to the generative AI might get you the source name, and thus you have essentially resolved the alleged plagiarism, albeit by your own overt efforts.”

However, it may not be that simple. Eliot said AI might not have stored the cited source, may have incorrectly cited a source, or even made up a cited source, “sometimes referred to as an AI hallucination, an absurd and disfavored anthropomorphic phrase that regrettably has garnered popular appeal.” Another possibility is that the generative AI will indicate a wrong source that had nothing to do with the emitted content.

“All in all, the best bet is to do an online lookup via an Internet search engine to potentially ferret out the source yourself,” he said. “This is a bit daunting since you might not have realized that some essay or portion of an essay does contain plagiarized material.”

“Sorry to say, that’s the risks you take using today’s generative AI,” Eliot added.

The issue of allegedly misappropriating people’s work, intentionally or not, is not going away. More than 4,000 writers — including Margaret Atwood, Louise Erdrich and Jodi Picoult — signed a letter in late June to the CEOs of OpenAI, Google, Microsoft, Meta and other AI developers, alleging that they exploit their work and “mimic and regurgitate” their language, style and ideas, the Associated Press reported.

(Microsoft, a financial backer of OpenAI, declined to comment.)

On a practical level, how can you check sources when ChatGPT provides neither links, like Google Search, nor citations, like Wikipedia, the free online encyclopedia edited and written by millions of volunteers around the world? “It’s compiling information. It’s like Wikipedia,” said Aaron Chimbel, dean of the Jandoli School of Communication at St. Bonaventure University in New York. “It’s a major technological interruption.”

He put ChatGPT on par with Wikipedia. “Nobody in academia or journalism would use Wikipedia as a source as it compiles information from different sources,” Chimbel told MarketWatch. “You might use it as a starting point rather than an official source.”

“Wikipedia has the Wiki editors, although there have been many instances of errors,” he added. “With any new technology, it takes a while to work through the process of the quality and how we use it, whether it’s journalism, education or the law. It applies to anybody who writes things. It’s going to apply to really every field.”

“It’s one of these things that will change how we work across all fields,” he told MarketWatch. “It’s a huge concern in higher education, journalism and in lots of fields. At the same time, it’s not going away.”


Can AI be used ethically?

If you want to use AI ethically, tread very carefully. “Like a box of chocolates, you never know what you might get out of generative AI,” Eliot, the AI expert, said. “It is incumbent upon the user of generative AI to check and double-check any emitted essays. Those that merely do a grab-and-go are going to possibly find themselves in hot water and attempt to finger point back at the generative AI and the AI maker might do you little good.”

He also warns of an ethical “boomerang,” and advises consumers to familiarize themselves with the policies of AI generators. “The AI maker can claim you didn’t abide by the licensing requirements,” he said, “and furthermore, if any third party tries to go after the AI maker, you usually have also agreed via the licensing to indemnify the AI maker — meaning that you might be on the hook for any legal bills incurred by the AI maker when defending against a claim being made by an action you took to disseminate an emitted essay.”

Some academics have argued that graduate students can leverage the power of AI for their initial research. That is, use it as a guiding light or sophisticated search engine rather than copying and pasting the information it aggregates.

Doug Ward, an associate professor at the School of Journalism and Mass Communications at the University of Kansas, recently wrote an article titled “Why generative AI is now a must for graduate classes.” In it, he suggests that AI could have a very beneficial effect on graduate education.

“The question there, though, isn’t how or whether to integrate AI into coursework,” he wrote. “Rather, it’s how quickly we can integrate AI into methods courses and help students learn to use AI in finding literature; identifying significant areas of potential research; merging, cleaning, analyzing, visualizing, and interpreting data; making connections among ideas; and teasing out significant findings. That will be especially critical in STEM fields and in any discipline that uses quantitative methods.”

Ethan Mollick, a professor at the Wharton School of the University of Pennsylvania, similarly wrote last month that ChatGPT with Code Interpreter “allows the AI to do math (very complex math) and do more accurate work with words (like actually counting words in a paragraph), since it can write Python code to address the natural weaknesses of large language models in math and language. And it is really good at using this tool appropriately.”

St. Bonaventure University’s Chimbel said one side of the argument surrounding AI holds that ChatGPT and similar tools should be banned for students, academics and other professionals, while the other side is exploring how to incorporate them. “A total ban may not be practical given how pervasive it is,” he said. “We are looking at it as a course-by-course approach.”

But that does not and should not undercut guidelines related to standards of misconduct, Chimbel said. “Somebody who presents material as their own is still academic misconduct,” he added. “But what if someone uses an AI program as a prompt to get them thinking? It’s all in the nuance between the broad type of plagiarism vs. some of these other issues.”

Programs that detect plagiarism and AI-generated text also need fine-tuning to become more effective, observers say. Edward Tian, a senior at Princeton University, announced on social media in January that he had created an app, GPTZero, to “quickly and efficiently detect” whether a student or professional is using text originally written by ChatGPT. It seems to be an in-demand service: Thus far, his post has been viewed 7.8 million times.

So should the ordinary Joe Soap be concerned about AI potentially plagiarizing other people’s work? “Keep mumbling to yourself the famous catchphrase of caveat emptor,” Eliot said. “As the user of generative AI services, look in the mirror to see who is supposed to be wary. Whether AI makers can stand up to potential ethical outrage over allowing plagiarism to occur by their generative AI is yet to be seen. Meanwhile, keep your eyes wide open and remain ever vigilant to double-check and triple-check anything that generative AI emits.”

So can ChatGPT be trusted — and can it be trusted to answer prompts about the nature of its own services as they relate to plagiarism and copyright infringement? Chimbel has his doubts. AI chatbots can spit out information that may not be vetted, accurate or clear, he said. “It does that when you ask tricky questions.”
